- The real question behind the question

- The “human” doctor: empathy, trust, and moral judgment

- The “animal” doctor: instincts, pattern recognition, and survival mode

- The “robot” doctor: protocols, technology, and algorithmic guardrails

- So… are doctors humans, animals, or robots?

- What patients can do to bring out the best “human + tools” version of medicine

- What healthcare systems can do so doctors don’t have to cosplay as robots

- Experiences: When doctors feel human, animal, and robot (often in the same hour)

If you’ve ever sat on an exam table in a paper gown (aka the least flattering fashion choice in history),

you’ve probably had a moment where your doctor felt… not entirely human. Maybe they were warm and

reassuring. Maybe they were brisk and hyper-focused. Maybe they spent more time staring at a computer

screen than your face. And maybe, just maybe, you wondered: Are doctors more like humans, animals, or robots?

It’s a funny question on the surface, but it’s also a surprisingly useful one. Doctors are asked to do

something that’s borderline magical: combine compassion with precision, speed with accuracy, and

scientific evidence with the messy reality of real life. They’re expected to be endlessly patient, never

miss a detail, and deliver perfect decisions while juggling alarms, paperwork, and people. In other words:

we want them to be human… but with robot-level stamina… and animal-level instincts.

The truth is, doctors can look like all three depending on the moment, the setting, and the system they’re

working in. Let’s break it down (playfully, but with real-world evidence) so you can understand what’s

happening on the other side of the stethoscope.

The real question behind the question

When people compare doctors to robots, they’re usually reacting to efficiency: fast visits, scripted

questions, and the sense that healthcare is becoming a conveyor belt. When they compare doctors to

animals, they’re noticing instinct: rapid pattern recognition, gut feelings, and snap judgments. And when

they compare doctors to humans, they’re noticing the obvious (but also the most important) part: empathy,

uncertainty, emotion, and moral responsibility.

Here’s the twist: modern medicine requires all three. Not equally. Not all the time. But

often in the same day, sometimes in the same patient encounter.

The “human” doctor: empathy, trust, and moral judgment

A human doctor isn’t just “nice.” Human-ness in medicine is a clinical tool. It builds trust, improves

communication, and helps patients share details they might otherwise hide (“I swear I’m taking the

medication…” while the unopened bottle is basically still shrink-wrapped).

Empathy is not fluff; it’s part of the work

Empathy helps doctors understand what matters to a patient: pain tolerance, fear, cultural beliefs, family

pressures, finances, and the everyday obstacles that determine whether a treatment plan is realistic. A

“perfect” plan that a patient can’t follow isn’t perfect; it’s just paper.

Many U.S. medical schools use standardized patients (trained actors) to teach students how

to deliver bad news, handle sensitive topics, and communicate clearly under pressure. That training exists

because empathy and communication skills can be learned and strengthened, not just wished into existence.

Human judgment means dealing with uncertainty

Medicine rarely offers 100% certainty. Symptoms overlap. Tests can mislead. Two reasonable clinicians can

interpret the same situation differently. That’s not because doctors are careless; it’s because biology is

chaotic and humans don’t come with a “Check Engine” dashboard (though honestly, that would be convenient).

Human doctors weigh not only “What is medically possible?” but also “What is best for this person?”

That involves values: quality of life, risk tolerance, time, family responsibilities, and personal goals.

Algorithms can help, but they don’t carry moral responsibility. Humans do.

The “animal” doctor: instincts, pattern recognition, and survival mode

Before we insult anyone’s lineage, let’s clarify what “animal” really means here: fast, adaptive, pattern-based

decision-making. In clinical settings, that can be brilliant. It can also be risky.

Fast thinking vs slow thinking: why “gut feelings” exist

Research on clinical reasoning often describes two modes of thinking. One is fast and intuitive (pattern

recognition). The other is slower and analytical (careful, step-by-step reasoning). Experienced clinicians

often switch between them. That “I’ve seen this before” instinct can be lifesaving, especially in emergency

situations where time matters.

But fast thinking can also bring cognitive shortcuts. In medicine, those shortcuts can lead to missed

diagnoses, delayed treatment, or tunnel vision, especially when a case is unusual, when symptoms are vague,

or when the clinician is tired and overloaded.

Cognitive bias: the animal brain’s greatest party trick

Cognitive biases are predictable errors in judgment that happen when humans make decisions quickly.

Healthcare researchers and patient safety experts have linked multiple types of cognitive bias to diagnostic

mistakes and management errors. The point isn’t to shame doctors; it’s to acknowledge the reality of how human

brains work under pressure.

Common examples include:

- Anchoring: locking onto the first explanation and ignoring new clues.

- Availability bias: overestimating what you’ve seen recently (“Everyone has the flu lately…”).

- Confirmation bias: noticing facts that support your hunch and downplaying the rest.

- Premature closure: stopping the search too early because the answer feels “good enough.”

Healthcare systems try to counter these instincts with checklists, second opinions, decision-support tools,

and a culture that welcomes questions. The best clinicians don’t pretend bias is impossible; they build

habits to reduce it.

The “pack” factor: teamwork, hierarchy, and handoffs

Humans are social animals, and hospitals are basically high-stakes anthills with pagers. The reality of

modern care is that it’s often delivered by teams: physicians, nurses, pharmacists, techs, therapists, and

others. That’s good: teams catch errors and share expertise. But teamwork only works when communication is

structured and safe.

Tools like SBAR (Situation, Background, Assessment, Recommendation) exist to make communication

clear during handoffs and urgent conversations. When used well, structured handoffs can reduce confusion,

improve patient safety, and help teams act quickly.
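For the structurally curious, here is a minimal sketch of what "structured" means in practice. It is an illustration only, not how any real hospital system implements SBAR: the class name `SbarHandoff`, its methods, and the sample message are all invented for this example. The idea is simply that every handoff carries the same four parts in the same order, so a missing piece is easy to spot.

```python
from dataclasses import dataclass


@dataclass
class SbarHandoff:
    """One handoff message with the four SBAR parts (hypothetical structure)."""
    situation: str
    background: str
    assessment: str
    recommendation: str

    def missing_parts(self) -> list[str]:
        # A part counts as missing if it is empty or only whitespace.
        return [name for name, value in vars(self).items() if not value.strip()]

    def render(self) -> str:
        # Emit the parts in S-B-A-R order so the listener knows what comes next.
        return "\n".join(
            f"{name.capitalize()}: {value}" for name, value in vars(self).items()
        )


# Invented example message, for illustration only.
handoff = SbarHandoff(
    situation="Patient is short of breath",
    background="Admitted yesterday",
    assessment="",
    recommendation="Please come and assess",
)
print(handoff.missing_parts())
print(handoff.render())
```

In this sketch the empty assessment is flagged before the message is sent, which is the whole point of a fixed structure: gaps become visible instead of silently dropped.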

The “robot” doctor: protocols, technology, and algorithmic guardrails

If doctors sometimes feel robotic, it’s partly because medicine has become more standardized, for good reasons.

Standardization reduces preventable harm. It also helps ensure that patients get consistent, evidence-based care

instead of a “wild west” of personal opinions.

Guidelines and checklists aren’t soulless; they’re safety rails

Evidence-based guidelines exist because humans forget things, get distracted, and misjudge probabilities.

Checklists are famously useful in aviation and have been adopted in healthcare to reduce preventable errors.

No one wants “creative improvisation” when wrong-site surgery is on the line. That’s not artistry; that’s a lawsuit

waiting to happen.

Antibiotic stewardship is another example. Overprescribing antibiotics contributes to antibiotic resistance

and avoidable side effects. Public health agencies have reported that a significant share of antibiotic

prescriptions in outpatient settings are unnecessary. Stewardship programs and clinical guidelines are

designed to help clinicians prescribe antibiotics only when they’re likely to help.

The electronic health record: why the computer gets so much eye contact

Many clinicians dislike how much time documentation takes, but documentation is tied to safety, continuity,

and reimbursement. Electronic health records (EHRs) can improve access to information, but they can also create

burdens through inbox messages, alerts, and after-hours charting. Studies and national reports have linked EHR

workload and usability problems to clinician stress and burnout.

Burnout isn’t just “being tired.” It’s associated with emotional exhaustion, depersonalization, and reduced

sense of accomplishment. National physician surveys have shown burnout symptoms remain common among U.S.

physicians, even when rates improve compared to earlier years. When a system pushes clinicians to function

like machines, the human cost shows up eventually.

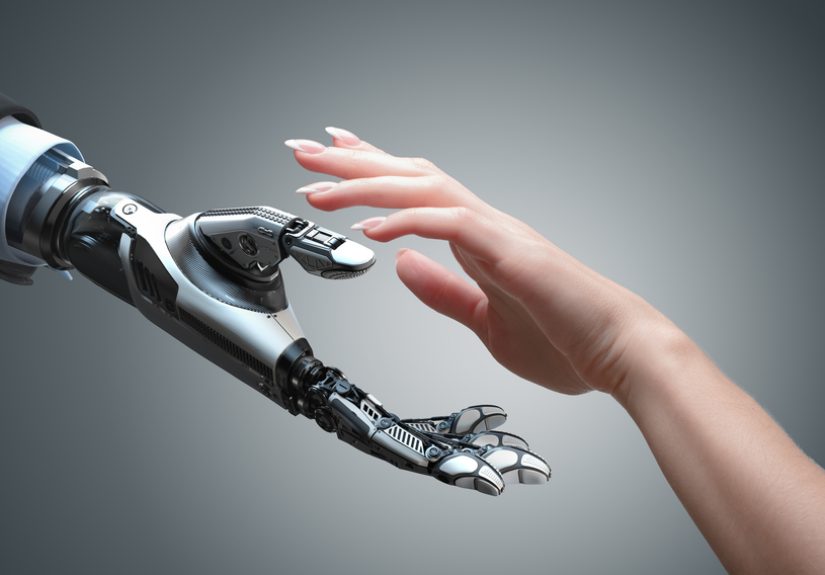

AI and clinical decision support: robots assisting humans (not replacing them)

Clinical decision support (CDS) tools can flag drug interactions, remind clinicians about preventive care,

and help interpret risk. More advanced AI can analyze medical images, identify patterns in data, or suggest

possibilities to consider.
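To make the "flag drug interactions" idea concrete, here is a toy sketch of the simplest possible version: a lookup table of pairs that should trigger a warning. Everything in it is an assumption made for illustration. The drug names are placeholders (`drug_a`, `drug_b`, and so on), the warnings are not real pharmacology, and the function name `check_new_prescription` is invented; real CDS systems draw on curated clinical databases and far richer logic.

```python
# Hypothetical interaction table: placeholder names, NOT real pharmacology.
# Each key is an unordered pair of drugs; each value is the warning text.
INTERACTIONS = {
    frozenset({"drug_a", "drug_b"}): "placeholder warning for A with B",
    frozenset({"drug_b", "drug_d"}): "placeholder warning for B with D",
}


def check_new_prescription(new_drug: str, current_meds: list[str]) -> list[str]:
    """Return one alert per current medication that pairs badly with new_drug."""
    new = new_drug.strip().lower()
    alerts = []
    for med in current_meds:
        existing = med.strip().lower()
        # frozenset makes the lookup order-independent: (A, B) equals (B, A).
        warning = INTERACTIONS.get(frozenset({new, existing}))
        if warning is not None:
            alerts.append(f"{new} + {existing}: {warning}")
    return alerts


# Usage: the clinician adds drug_b for a patient already on drug_a and drug_c.
for alert in check_new_prescription("Drug_B", ["drug_a", "drug_c"]):
    print("ALERT:", alert)
```

Note what the sketch does not do: it knows nothing about dose, kidney function, or why the patient needs both drugs. That gap is exactly where the clinician’s judgment comes in.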

In the U.S., regulators have published guidance and information on software used in medical contexts,

including AI-enabled software that can function as a medical device in certain situations. That oversight

matters because an AI tool is only as reliable as the data it learned from, and real-world medicine is messy.

Bias can show up in data. Rare conditions can be missed. And a confident-sounding output can mislead busy

humans if it’s treated like an oracle.

The healthiest framing is this: AI should be a co-pilot, not the captain. Clinicians are

responsible for context, values, and accountability.

Robotic surgery: the robot has arms, but the surgeon has the job

Robot-assisted surgery is a great example of how “robotic” doesn’t mean “automatic.” In robot-assisted

procedures, a surgeon controls instruments through a console; the robot doesn’t decide what to cut or when.

Medical references for patients emphasize that robotic surgery has benefits like smaller incisions and

potentially faster recovery, but it also carries risks similar to other surgical approaches.

Ethical and safety discussions around robotic-assisted surgery highlight a real-world issue: the technology

can change team dynamics, increase complexity, and require extra communication so safety doesn’t slip. So even

in the most futuristic operating room, human teamwork still decides whether care is excellent or chaotic.

So… are doctors humans, animals, or robots?

The honest answer is: doctors are humans who use animal-like instincts and robot-like tools

to do a job that no single mode can handle alone.

Here’s a practical way to think about it:

- Human is for connection: listening, explaining, building trust, and making value-based decisions.

- Animal is for speed: pattern recognition, intuition, and real-time adaptation under pressure.

- Robot is for reliability: checklists, protocols, reminders, documentation, and decision-support tools.

Problems happen when one mode crowds out the others:

- If “robot” dominates, care can feel cold, rushed, and transactional, and burnout rises.

- If “animal” dominates, bias and snap judgments can increase risk.

- If “human” dominates without guardrails, variability and inconsistency can creep in.

The goal isn’t to pick a winner. The goal is balance: compassionate care supported by evidence and safety systems,

delivered by teams that respect both human limits and human dignity.

What patients can do to bring out the best “human + tools” version of medicine

You shouldn’t have to manage a healthcare system like it’s a part-time job. But small moves can improve

communication and reduce mistakes, especially in rushed settings.

Bring clarity (so nobody has to guess)

- Write down symptoms, when they started, and what makes them better or worse.

- Bring a medication list (including supplements).

- If you’ve had tests or visits elsewhere, bring summaries when possible.

Ask “What else could it be?” (without sounding like a detective show)

This question nudges analytical thinking without accusing anyone of being wrong. It invites your clinician to

consider alternatives and explain their reasoning.

Repeat back the plan

A quick “So the plan is X, and I should watch for Y, and call if Z happens, right?” can catch misunderstandings

instantly. Clear communication protects everyone.

Note: This article is educational and not a substitute for professional medical advice. If you have urgent

symptoms, seek immediate care.

What healthcare systems can do so doctors don’t have to cosplay as robots

If we want doctors to show up as their best human selves, the system has to stop treating them like endlessly

rechargeable batteries.

- Reduce EHR burden: improve usability, cut unnecessary clicks, and support team-based documentation.

- Support clinician well-being: realistic staffing, protected time, and mental health resources.

- Strengthen diagnostic safety: feedback loops, second-look pathways, and a culture that welcomes uncertainty.

- Use AI responsibly: transparent evaluation, monitoring for bias, and clear accountability.

- Improve handoffs: structured communication tools and training that makes teamwork safer.

In other words, the future of medicine shouldn’t be “replace humans.” It should be “build systems that let humans

practice medicine like humans, backed by smart tools.”

Experiences: When doctors feel human, animal, and robot (often in the same hour)

To make this question feel real, imagine a few scenes you’ve probably seen (or lived) without realizing how much

is happening behind the curtain.

1) The waiting room handshake that changes everything

A patient walks in with vague symptoms: fatigue, headaches, “just not feeling right.” The doctor could act like a

robot: run a checklist, order labs, move on. But instead, they start human: “What’s been going on in your life lately?”

The patient pauses, then admits they’ve been sleeping three hours a night because they’re caring for a family member,

working extra shifts, and living on coffee and snack crackers.

That one human question reshapes the medical picture. Now the plan isn’t just tests; it’s sleep, stress, support,

and realistic steps. The science is still there, but the treatment becomes a partnership instead of a printout.

2) The animal instinct in the hallway

In a busy clinic, a nurse mentions a patient “doesn’t look right.” No fancy description, no perfect data, just a

gut signal. The physician switches into animal-mode pattern recognition: skin tone, breathing effort, posture,

confusion. Their brain is doing fast sorting in the background: “This could be serious.”

That instinct is useful. But good clinicians don’t stop there. They pivot into slow, careful thinking: vital signs,

history, focused exam, targeted tests. Instinct opens the door; analysis checks the locks.

3) The robot moment that protects you

Later, the doctor prescribes a medication. The EHR pops up an alert: potential interaction with something the

patient takes. The doctor sighs (because alerts can be annoying), but this time it matters. They double-check,

adjust the plan, and avoid a preventable side effect. That’s the robot side doing what it’s supposed to do:

catching what tired humans can miss.

4) The operating room: the robot has the arms, the team has the safety

In a robot-assisted surgery, the surgeon sits at a console, controlling tiny instruments with extreme precision.

It looks futuristic, and it is. But what makes it safe isn’t the robot’s hardware; it’s the humans coordinating:

nurses confirming instruments, anesthesiology monitoring vital signs, the team using structured communication when

something changes. The technology increases capability, but it also increases complexity, so teamwork becomes even

more essential.

5) The burnout edge: when humans get treated like machines

Now picture the end of the day: the clinic is over, but the inbox isn’t. Messages, refill requests, prior

authorizations, documentation: hours of quiet, invisible work. This is where “robot expectations” can eat away at

the human core of medicine. When clinicians are pressured to move faster and document more, patients feel the

consequences: shorter visits, less eye contact, and that “why do I feel like a ticket number?” vibe.

And here’s the part worth saying out loud: that coldness is often not a personality flaw; it’s a system symptom.

Many clinicians still care deeply. But caring deeply while running on empty is how compassion fatigue shows up.

So if you’ve ever felt like your doctor was part robot, part exhausted mammal, and part caring human… you weren’t

imagining it. Medicine today often demands all three. The best outcomes happen when tools support the humans,

instincts are checked by reflection, and the system makes room for actual conversation, not just clicking “Next.”