Table of Contents

- Why cameras are suddenly in the trust business

- The big shift: provenance beats vibes

- What camera makers are doing right now

- So… will this actually stop AI fakes?

- How verification could look in the real world

- What needs to happen next for this to work at scale

- The honest bottom line

- Experiences and Lessons from the “Prove It” Era (Extended)

We’re living in the era where a picture is worth a thousand words… and also worth a thousand skeptical comments like “nice AI bro.” The internet didn’t just lose trust in politicians, ads, and your cousin’s “natural” glow-up; it’s now side-eyeing photos themselves. If everything can be generated, edited, and remixed in seconds, how do you prove an image came from a real camera at a real moment in time?

Camera makers (and the wider tech world around them) are trying to answer that question with something that sounds boring but could be genuinely huge: cryptographic provenance. In plain English, the idea is to let a camera “sign” a photo at the moment it’s captured, creating a tamper-evident trail that can be checked later. Think of it like a digital receipt for reality, one that’s harder to fake than a “Shot on my phone” watermark slapped onto a suspiciously perfect image of a unicorn eating ramen.

Why cameras are suddenly in the trust business

For decades, photography was already a balance between “captured” and “crafted.” Cropping, color grading, dodging and burning: none of that is new. What’s new is the speed and scale of synthetic images (and the fact that they can look indistinguishable from authentic photos at a glance). That creates a practical problem:

- Newsrooms need to prove images are legitimate before publishing, and defend them afterward.

- Brands need to show marketing visuals aren’t deceptive or manipulated beyond disclosure.

- Creators need credit and protection against reposts and misattribution.

- Everyone else needs a way to tell “this is real” without becoming a full-time forensic analyst.

AI detectors that “guess” whether an image is generated aren’t enough. They can be wrong, and they’re locked in a never-ending arms race with better generators. Camera makers are betting on a different strategy: don’t try to detect the fake; prove the real.

The big shift: provenance beats vibes

The key concept here is content provenance: a record of where something came from and what happened to it. Instead of asking, “Does this look like AI?” provenance asks, “Can you show me a trustworthy history of this file, starting at capture?”

Meet C2PA and Content Credentials (the “nutrition label” approach)

A major effort behind this is the C2PA (Coalition for Content Provenance and Authenticity) and the broader ecosystem often labeled Content Credentials. The basic idea is that media can carry tamper-evident metadata describing key facts: capture source, edits, and other provenance details.

When implemented well, it’s not just a text note buried in a file; it’s a cryptographically protected set of information that can be verified.

If you’ve ever checked an ingredient list, you’ll get the vibe. Content Credentials aim to answer questions like:

- Was this captured by a camera or generated by software?

- Which device created it, and when?

- Has it been edited, and if so, how?

- Is there a verifiable chain of custody from capture to publication?
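To make the checklist above concrete, here is a minimal Python sketch of what a credential record might hold, with one field per question. The class name `CredentialRecord`, its fields, and the device name `ExampleCam X1` are all hypothetical; this is not the C2PA manifest schema, which is considerably richer.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CredentialRecord:
    """Hypothetical record: one field per question, not the C2PA schema."""
    source_type: str            # "camera" or "software": how was it made?
    device: Optional[str]       # which device created it?
    captured_at: Optional[str]  # when? (ISO 8601 timestamp)
    edits: List[str] = field(default_factory=list)    # how was it edited?
    custody: List[str] = field(default_factory=list)  # who handled it, in order

    def was_camera_capture(self) -> bool:
        return self.source_type == "camera"

    def summary(self) -> str:
        if self.was_camera_capture():
            origin = f"captured on {self.device} at {self.captured_at}"
        else:
            origin = "generated by software"
        edits = ", ".join(self.edits) or "no recorded edits"
        chain = " -> ".join(self.custody) or "no custody record"
        return f"{origin}; edits: {edits}; custody: {chain}"


record = CredentialRecord(
    source_type="camera",
    device="ExampleCam X1",  # hypothetical device name
    captured_at="2025-03-14T09:26:53Z",
    edits=["crop", "exposure +0.3"],
    custody=["photographer", "newsroom", "publisher"],
)
print(record.summary())
```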

How the “signature” part works (without a cryptography headache)

Here’s the simplified version. A camera (or a trusted system) creates a cryptographic “fingerprint” (a hash) of the image and signs it using keys that are hard to compromise. If someone changes the image in a way that breaks the expected record, verification tools can flag that the credentials don’t match.
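The sign-then-verify idea can be sketched in a few lines of Python. One loud assumption: real capture signing uses public-key signatures, so anyone holding the public key can verify without being able to sign. To stay within the standard library, this sketch swaps in HMAC-SHA256, a shared-secret technique, which shows the tamper-evidence property but not public verifiability. The names `DEVICE_KEY`, `fingerprint`, `sign`, and `verify` are illustrative.

```python
import hashlib
import hmac
import secrets

# Stand-in for the camera's protected signing key. Real systems use a
# private key held in the device plus a public key for verifiers; HMAC
# with a shared secret is used here only because it ships with Python.
DEVICE_KEY = secrets.token_bytes(32)


def fingerprint(image_bytes: bytes) -> bytes:
    """SHA-256 digest: changing any byte of the image changes it."""
    return hashlib.sha256(image_bytes).digest()


def sign(image_bytes: bytes, key: bytes = DEVICE_KEY) -> bytes:
    """Create a keyed tag over the image's fingerprint."""
    return hmac.new(key, fingerprint(image_bytes), hashlib.sha256).digest()


def verify(image_bytes: bytes, signature: bytes, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(image_bytes, key), signature)


original = b"placeholder image bytes"
sig = sign(original)
print(verify(original, sig))            # True: untouched file
print(verify(original + b"\x00", sig))  # False: one extra byte breaks the match
```

The check is about bytes, not meaning: change a single byte and verification fails, but the signature has no opinion about what the image depicts.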

Importantly, this doesn’t mean “no editing allowed.” It means the system can record edits as part of the story. Legit color correction? Fine, record it. Cropping? Fine, record it. Turning a photo into a dragon-filled fantasy matte painting? Also fine; just don’t claim it’s straight documentary capture.
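One way to picture “record the edit” is a hash chain, where each history entry stores the hash of the entry before it, so rewriting or deleting an earlier step breaks every later link. The sketch below is a hypothetical structure (not the C2PA format), and the action names are made up for illustration.

```python
import hashlib
import json


def entry_hash(entry: dict) -> str:
    # sort_keys gives a stable serialization so the hash is reproducible
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


def append_edit(history: list, action: str) -> list:
    """Return a new history with the action linked to the previous entry."""
    prev = entry_hash(history[-1]) if history else None
    return history + [{"action": action, "prev": prev}]


def chain_is_intact(history: list) -> bool:
    """Every entry must point at the hash of the entry just before it."""
    for earlier, later in zip(history, history[1:]):
        if later["prev"] != entry_hash(earlier):
            return False
    return bool(history) and history[0]["prev"] is None


history = []
for step in ["capture", "color_correct", "crop", "fantasy_composite"]:
    history = append_edit(history, step)

print(chain_is_intact(history))   # True: every edit is on the record

# Pretend the crop never happened by rewriting that entry after the fact
tampered = [dict(e) for e in history]
tampered[2]["action"] = "none"
print(chain_is_intact(tampered))  # False: the next entry's "prev" no longer matches
```

In this sketch only the links between entries are checked, so the newest entry could still be altered undetected; a real system would also sign the latest state, as in the signing idea described earlier.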

What camera makers are doing right now

Different brands are rolling out authenticity features in different ways, but the most serious approaches share one common theme: credentials at capture. The earlier you create the trust signal, the less room there is for “mystery meat media” to sneak in.

Sony’s newsroom-focused authenticity: verification built for speed

Sony has been pushing a robust authenticity workflow aimed at news organizations. The concept: the camera embeds verifiable authenticity information at capture, and then authorized parties can verify it later through Sony’s validation tools. Sony has also discussed adding signals beyond basic metadata, such as proprietary depth information, intended to strengthen confidence that an image came from a real scene rather than a synthetic pipeline.

What makes this approach especially relevant to journalists is the emphasis on third-party verification. If a newsroom publishes a photo and gets challenged (“fake!”), the newsroom can point to a verification result that is designed to be shareable and checkable. That matters because authenticity isn’t only a technical problem. It’s a social one. People don’t just want proof; they want proof they can understand quickly.

Leica and the “first camera with Content Credentials” moment

Leica made headlines by baking Content Credentials into a flagship model and framing it as a direct response to the AI era’s trust crisis. The positioning is clear: the camera becomes a source of truth at capture, with credentials integrated into the photography workflow rather than bolted on later. Whether you shoot street photography, portraits, or documentary work, Leica’s message is basically: “You shouldn’t need to become a digital detective to prove your image is yours and is real.”

Is it also a very Leica move to make trust a luxury feature? Absolutely. But the bigger point is adoption: once one manufacturer proves it’s possible, it pressures the rest of the market to treat provenance as a standard feature, not a niche add-on.

Nikon’s authenticity push: a service built for professional trust

Nikon has introduced an authenticity service designed to help protect photographers and news organizations from AI-driven misinformation and fabricated imagery. The key emphasis is the ability for select Nikon cameras to add secure Content Credentials to captured photos, again reinforcing the “trust at capture” strategy.

For professionals, this isn’t just about dunking on deepfakes. It’s about workflow confidence: editors can prioritize images with verifiable capture credentials, and publishers can better defend the legitimacy of what they share.

Canon and the slow-but-steady march toward C2PA

Canon has also been moving toward C2PA-style content authenticity support, with reporting indicating C2PA features arriving for certain flagship models via updates. That’s an important trend: authenticity isn’t only “new camera hardware.” Increasingly, it’s a mix of firmware, software support, and compatibility across publishing pipelines.

Translation: the future of “pro” cameras may include not just faster autofocus and better stabilization, but also a built-in ability to say, “This file has receipts.”

So… will this actually stop AI fakes?

Here’s where we need to be honest (without being doom-and-gloom). Authenticity tech can be powerful, but it’s not a magic shield. It solves a specific problem extremely well: proving that a given file came from a trusted capture device and has a verifiable history. That’s huge for journalism, courts, insurance, and any context where provenance matters.

But it does not automatically solve these problems:

-

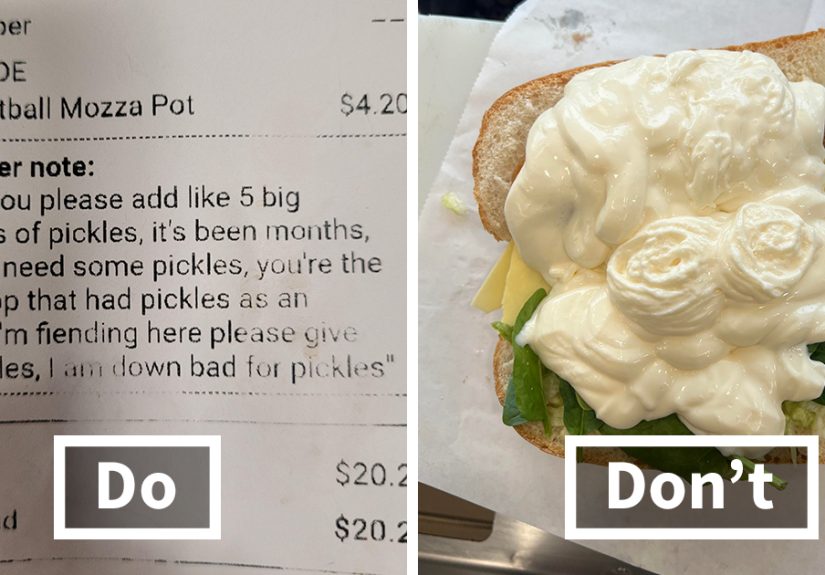

“Real photo, misleading story.” A signed photo can still be used with a false caption or wrong context.

Provenance can’t stop bad-faith framing. -

“The screenshot problem.” If someone screenshots an image, reposts it, or strips metadata, the

credentials may not travel with it. -

“Adoption gaps.” If platforms, apps, and social networks don’t preserve or display credentials,

the trust signal gets lost in transit. -

“Privacy and safety tradeoffs.” Provenance can include sensitive info (like location). Systems need

smart controls so safety isn’t sacrificed for transparency.
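The first two limitations can be shown with a small Python model. It assumes, for illustration, that the signature covers only the pixel bytes and that a stripped file simply arrives with no credentials; `SharedImage`, `check`, and the shared-secret HMAC (standing in for a real public-key signature) are all hypothetical choices made for this sketch.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional

KEY = b"demo-key"  # shared-secret stand-in for a real public-key signature


def sign(pixels: bytes) -> bytes:
    """Tag over the pixel bytes only; the caption is deliberately excluded."""
    return hmac.new(KEY, hashlib.sha256(pixels).digest(), hashlib.sha256).digest()


@dataclass
class SharedImage:
    pixels: bytes
    credentials: Optional[bytes]  # None once metadata has been stripped
    caption: str = ""             # travels with the post, not with the signature


def check(img: SharedImage) -> str:
    if img.credentials is None:
        return "no credentials"   # unproven, which is not the same as fake
    if hmac.compare_digest(sign(img.pixels), img.credentials):
        return "verified"
    return "tampered"


photo = b"placeholder pixels"
signed = SharedImage(photo, sign(photo), caption="Harbor fire, Tuesday")
stripped = SharedImage(photo, None, caption="Harbor fire, Tuesday")
miscaptioned = SharedImage(photo, sign(photo), caption="Riot downtown, today")

print(check(signed))        # verified
print(check(stripped))      # no credentials
print(check(miscaptioned))  # verified: the false caption goes unnoticed
```

The useful distinction is between “tampered” and “no credentials”: a stripped file is unproven rather than proven fake, and a signed file with a false caption still passes because the caption was never part of what was signed.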

The best way to think about it: authenticity signatures won’t eliminate fakes, but they can make verifiable truth easier to distribute, and that’s a major upgrade over the current internet standard, which is basically “argue in the comments until everyone gets tired.”

How verification could look in the real world

Let’s walk through a practical scenario, because this stuff can feel abstract until you imagine the actual pressure of a deadline.

Example: a photojournalist covering breaking news

- Capture: The photographer shoots an image on a supported camera with authenticity enabled. The camera embeds a signature and credentials at capture.

- Transfer: The file moves to the newsroom. Ideally, the pipeline preserves credentials rather than accidentally stripping them.

- Edit (allowed): The editor crops and adjusts exposure for clarity. Those edits can be recorded in the credential history, rather than hidden.

- Publish: The newsroom posts the image on a platform that supports credentials display, or links to a verification page when needed.

- Challenge: Someone claims it’s fake. The newsroom provides verification results that confirm it was captured on a real device with intact credentials.

That final step matters. Authenticity tech is partly about truth, but it’s also about defensibility. In an attention economy, being right isn’t always enough; you have to be able to show why you’re right quickly, clearly, and publicly.
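The Transfer step in that scenario is where credentials most often get lost quietly. A minimal sketch, assuming each pipeline stage can be modeled as a function that either keeps or drops the credentials, shows how a newsroom could locate the first stage that strips them. The stage names and helper functions are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical model: a file is (pixels, credentials); each stage returns a new file.
File = Tuple[bytes, Optional[bytes]]
Stage = Tuple[str, Callable[[File], File]]


def preserve(f: File) -> File:
    return f                      # e.g. a copy tool that keeps metadata


def strip_metadata(f: File) -> File:
    return (f[0], None)           # e.g. an export preset that drops metadata


def first_lossy_stage(f: File, stages: List[Stage]) -> Optional[str]:
    """Return the name of the first stage that dropped credentials, if any."""
    for name, stage in stages:
        had_credentials = f[1] is not None
        f = stage(f)
        if had_credentials and f[1] is None:
            return name
    return None


pipeline = [
    ("card ingest", preserve),
    ("FTP to desk", preserve),
    ("legacy export preset", strip_metadata),
    ("CMS upload", preserve),
]
print(first_lossy_stage((b"pixels", b"signature"), pipeline))  # legacy export preset
```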

What needs to happen next for this to work at scale

We’re early in the adoption curve. For authenticity tech to become as normal as EXIF data used to be, a few things have to line up:

1) Platforms must preserve credentials

If social networks and hosting pipelines strip metadata, provenance collapses. The ecosystem needs consistent support so credentials survive common actions like resizing, CDN delivery, and republishing.

2) Viewers need simple, human-friendly indicators

A cryptographic signature is only useful if people can see and understand it. That’s why “pins,” badges, or interactive inspection tools matter: they translate technical trust into something readable.

3) Standards must stay open and interoperable

If every camera company builds its own closed authenticity silo, trust becomes fragmented. Open standards like C2PA are meant to prevent that by creating a common language for provenance across devices and platforms.

4) Workflows must be fast

The news doesn’t wait for a verification committee meeting. For journalists, authenticity tools must be nearly invisible: simple to enable, quick to validate, and reliable under pressure.

The honest bottom line

“Prove this photo isn’t AI” is a tall order, especially on an internet that treats confidence like a renewable resource (it’s not). But camera makers and industry partners are finally building a practical answer: capture-time credentials that can be verified later. It won’t end misinformation, but it can raise the cost of deception and make real journalism easier to defend.

In other words: the camera is learning a new skill. Not just taking pictures, but keeping receipts.

Experiences and Lessons from the “Prove It” Era (Extended)

If you’ve spent any time around photographers, editors, or anyone who has ever tried to upload a perfectly normal image to the internet, you know the emotional journey:

Capture → Share → Get accused of faking it → Consider moving to a cabin with no Wi-Fi.

Authenticity tech is arriving in the middle of that chaos, and the lived experience around it is already changing how people shoot, publish, and even argue online.

One common experience in news and documentary workflows is the “verification tax.” It’s the invisible time cost that appears whenever something feels too important to get wrong. A photo comes in from the field and everyone pauses: Where did this come from? Can we trust it? Who touched it? In the past, that might involve phone calls, raw-file requests, reverse image searches, and a lot of gut instinct. With capture-time credentials, the goal is to replace some of that scramble with a quick check that answers basic questions immediately. The difference isn’t just technical; it’s psychological. When you can verify provenance fast, you can spend more energy on the story and less on defensive forensics.

Another real-world pattern: people don’t only want authenticity; they want shareable proof. In practice, that means a credential system needs a way to show verification results in a format that survives the way the internet actually behaves: group chats, reposts, screenshots, “my friend sent me this” chains, and platform compression. When a newsroom can point to a verification result, it gives editors something concrete to stand on. It won’t convince every bad-faith commenter, but it changes the tone of the conversation with reasonable skeptics: the debate becomes “here’s the evidence,” not “trust me, bro.”

On the creator side, there’s a very human experience that keeps repeating: the frustration of being erased. A photographer posts an image, it gets reposted without credit, then copied again, then edited, then turned into “content,” and suddenly the original author is invisible. Content Credentials are partly about fighting fakes, but they’re also about fighting amnesia, making it easier for the internet to remember who made something and how it evolved. Even if credentials don’t survive every platform perfectly, creators are increasingly drawn to anything that makes attribution less fragile than a caption that can be cropped out in half a second.

There’s also a learning curve that feels very familiar to anyone who has adopted new camera features: at first, authenticity settings can feel like another menu rabbit hole. People worry about slowing down capture, draining batteries, complicating ingest, or exposing sensitive metadata. Early adopters tend to develop habits: turning credentials on for assignments that might be contested, keeping them off for sensitive work, or using selective controls to avoid sharing location data. This is where the tech has to mature: the best systems will make these controls obvious and safe by default, so photographers don’t have to choose between transparency and protection.
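The “selective controls” idea can be sketched as a filter applied before signing, so private fields never enter the credential at all. This is a hypothetical Python sketch: the field names, the `prepare_metadata` helper, and the choice to withhold GPS by default are assumptions, not any manufacturer’s actual settings.

```python
from typing import Dict, FrozenSet

# Hypothetical default: location is withheld unless the photographer opts in.
DEFAULT_PRIVATE_FIELDS: FrozenSet[str] = frozenset({"gps"})


def prepare_metadata(
    meta: Dict[str, str], private_fields: FrozenSet[str] = DEFAULT_PRIVATE_FIELDS
) -> Dict[str, str]:
    """Return the fields that will be signed, leaving private ones out."""
    return {k: v for k, v in meta.items() if k not in private_fields}


capture = {
    "device": "ExampleCam X1",          # hypothetical device name
    "time": "2025-03-14T09:26:53Z",
    "gps": "51.5007,-0.1246",
}
print(prepare_metadata(capture))                              # location withheld
print(prepare_metadata(capture, private_fields=frozenset()))  # opted in
```

Filtering before signing matters in this design: deleting a field after the credential was created would look like tampering, whereas leaving it out beforehand yields a record that still verifies.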

A final experience worth mentioning is the cultural shift in what “proof” means. For years, audiences treated photos as inherently persuasive. Now, the default stance is suspicion. That’s exhausting, but it also creates space for a new norm: verified media earns a kind of credibility badge, while unverified media becomes “interesting but unproven.” In that world, authenticity tech doesn’t just help the people who use it; it helps set expectations for everyone. It trains audiences to ask better questions, and it gives honest publishers a way to answer them quickly.

The bigger takeaway from all these experiences is simple: authenticity isn’t a single feature; it’s an ecosystem. Cameras can sign images, but the real “win” happens when the entire path from capture to consumption preserves and displays that trust. We’re not all the way there yet. But for the first time in a long time, the industry isn’t relying on wishful thinking or vibe-based detection. It’s building a system where truth can travel with the file.

And honestly? In 2025, that feels like a small miracle: cryptography wearing a camera strap.